PeerDAS-devnet-7 Updates

We recently ran PeerDAS through its paces on a modified peerdas-devnet-7 testnet (big thanks to EthPandaOps for leading the spec work!). This devnet was run by Sunnyside Labs, a core development team in the Optimism Collective, to benchmark how well different combinations of execution and consensus layer clients handle blob throughput under stress.

As we build the Superchain, we need data availability that's both reliable and affordable. Every additional blob we can fit in a block brings down the cost of posting L2 data to Ethereum. It’s a win for everyone - users pay less, developers tap into shared liquidity and cross chain distribution, and data heavy applications (like onchain games) can scale without blowing up costs. More activity on the L2 will also drive more transaction fees back to Ethereum, reinforcing its security by providing validators with more earning potential.

This is why we're stress testing blob throughput across the entire client matrix. Benchmarking every consensus/execution pair under controlled conditions helps us surface hidden bottlenecks early and measure the current performance ceiling. It also allows us to stay committed to preserving Ethereum’s stability and performance and ensures that increasing blob capacity does not come at the cost of network health. Our aim is to collaborate with core devs and client teams to turn promising peaks into sustained performance.

In this post, we’ll walk through our benchmark results, highlight the wins, and dig into the stability challenges we uncovered along the way.

If you’d like to back‑read the series, check out previous Sunnyside Labs reports below:

TL;DR: We hit 50 blobs, but found the ceiling

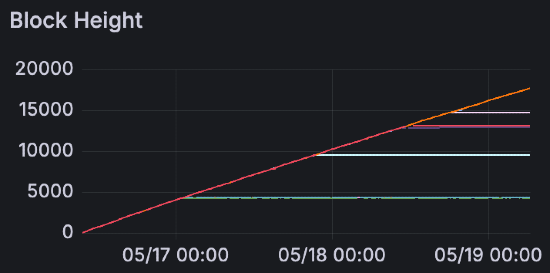

We ran this devnet with 60 nodes to understand how well the network holds up as blob throughput increases. We reached nearly 50 blobs/block consistently with a diverse mix of EL/CL combinations. Beyond this threshold, we started seeing network strain: consensus delays piled up, blob fetching became unreliable, and some supernodes began failing. The pattern was clear with consensus clients struggling with block import delays and missing attestation windows, making it harder for the network to maintain pace under high load.

Environment configuration

This round expanded our setup from 36 to 60 nodes (33 supernodes, 27 full nodes). Each node ran with 4 vCPUs and 8 GB RAM. We tested all major EL/CL combinations using Spamoor to gradually increase blobs per block. Unlike earlier rounds, we didn’t reset the chain between stages and instead continuously ramped up blob load to stress test failure point.

| component | version |

|---|---|

| consensus layer | alpha releases built for devnet‑7 spec |

| execution layer | alpha releases compiled with getBlobsV2 enabled |

| network | modified peerdas‑devnet‑7, 60 nodes, 8 validators, 12 s slots |

| hardware | 4 vCPU / 8 GiB RAM per node digitalocean instances |

| load | spamoor blob generator, monotonically increasing load (no forced resets) |

note: We ran this test from May 17 – 19, 2025. All clients shared identical peer limits and gossip configs. Logs and metrics were piped to a shared Grafana + Prometheus setup.

We ran two parallel devnets with identical topology to spot run‑to‑run variance. Spamoor started at 1 blob / block and added one blob every ten minutes. This allowed us to test network and client behavior under cumulative pressure, capturing the effect of long-term strain on consensus sync, blob propagation, and execution fetch reliability.

Key findings

Blob throughput

Network blob throughput peaked around 50 blobs per block. Previous runs saw only specific client pairs like lighthouse/besu and lighthouse/geth reach 43–50 blobs per block; other combinations typically stalled out between 18–35 blobs before becoming unstable. This time, we were able to reach close to 50 blobs per block consistently, even with a diverse mix of execution and consensus clients (we will continue investigating if this can be attributed to distributed blob building, which was part of the devnet 7 spec).

However, pushing beyond 50 blobs led to performance degradation with slot misses and chain resets, preventing sustained higher throughput. The repeated spike-and-drop pattern we observed suggests that 50 blobs per block represents our current network ceiling under these conditions.

Consensus delays

As blob load increased, consensus delays stacked up: blocks arrived late, imports lagged, and attestations missed their windows. These compounding delays led to missed slots and reduced overall network participation.

We measured three types of delays that became problematic:

- Block arrival latency: How long blocks took to reach nodes

- Block import delays: Processing time (validating and executing a newly arrived block) once blocks arrived

- Attestation timing issues: Missing the window for proper attestations

To reliably exceed the 50 blob threshold, clients must minimize consensus delays and optimize each stage of block processing.

At peak blob counts, we also observed signs of consensus instability like minor re-orgs, inconsistent attestations, and occasional head divergence signaling a strained CL. As a result, some test runs saw sustained throughput capped at around 25–35 blobs per block, well below the peak capacity observed in isolated bursts.

Blob fetching from execution clients degraded noticeably at high blob counts, adding pressure on consensus clients trying to verify and attest blocks. This created a feedback loop where consensus delays made execution layer performance worse, which in turn made consensus delays even more pronounced.

Supernode stability

Supernodes performance degraded at blob count peaks. The crashes only occurred on supernodes when blobs per block hit the 50 blob/block levels, pointing to a reproducible performance weakness under high blob pressure. Lodestar supernodes were the first to fail in our devnet. Their sync process proved unstable under stress, frequently dropping out and falling behind other client implementations (more on this in the next section).

These results highlight a need for more fault-tolerant architecture and improved recovery mechanisms, especially for supernodes operating in high-throughput environments.

Lodestar performance in context

Under moderate load (up to approximately 35 blobs per block), Lodestar maintained comparable performance to other consensus clients. As blob throughput increased beyond this threshold, we observed some stability challenges that offer important learning opportunities for high-throughput scenarios.

Lodestar supernodes showed higher CPU usage, particularly during recovery phases, suggesting intensive catch-up processes.

We also observed elevated RAM consumption alongside spiked inbound network usage, indicating opportunities for optimizing blob and sidecar propagation efficiency.

Sidecar column processing also showed longer verification times, which likely contributed to sync issues. The full runtime of data column sidecar gossip verification was consistently higher for Lodestar nodes across our test.

The ChainSafe team has already opened a PR to improve batch verification of DataColumnSidecars, which we expect will lead to a performance boost: https://github.com/ChainSafe/lodestar/pull/7910.

Why this matters: These findings aren't about declaring winners and losers; they're about identifying specific optimization opportunities. Every client implementation faces unique challenges at scale, and understanding these patterns helps the entire ecosystem improve.

The path forward

Sunnyside’s peerdas-devnet-7 testing confirms that we can approach 50 blobs per block, but reaching and exceeding this threshold reliably will require focused optimizations across clients and network infrastructure. Current bottlenecks include cumulative consensus delays, execution layer fetch lag, and insufficient supernode robustness, all of which must be addressed to ensure stability at scale.

Bottlenecks to investigate:

- Consensus layer delays that compound under sustained high load

- Execution layer fetch lag that creates cascading performance issues

- Supernode robustness under peak throughput conditions

We'll dive deeper into whether (and how) distributed blob building contributed to lifting performance across all client pairs and what this means for sustainable blob throughput. We’ll also analyze why some runs saw sustained throughput capped well below peak capacity and determine whether this was due to network limits, client specific issues, or our load generator configuration.

The ceiling is clearer now - we're approaching a 48-50 blob target and believe we'll be well-positioned for future improvements based on these findings. The Superchain needs predictable, high-throughput data availability, and each step forward in blob performance directly benefits our users and developers.

Until next time! Onwards to cheaper blobs for all ✨